Incremental Data Loading in Qlikview

Why Incremental Load? how it is different from normal data loading approach?

In large scale industries in production environment business applications there are lots of frequent transaction of data happens, so in a due course of time the volume of the database become substantially large. And those Large transnational tables can be significantly time consuming in a reload. Taking advantage of Qlikview’s incremental load logic can reduce that time considerably. An incremental load is a process of simply appending new records or updating specific records in an existing QVD. There are three key scripting options available for incremental loads.

- Insert Only

- Insert & Update

- Insert, Update, & Delete

Each of these three scenarios is designed to run once an INITIAL LOAD has occurred. An initial load is a task that creates the source QVDs. These QVDs from then on can be optimized to reload with one of the following incremental load scripts. Since an incremental load is designed to pull only new or altered data, a source QVD is needed to hold all non-modified information and must exist before an incremental load can run.

1. Insert Only

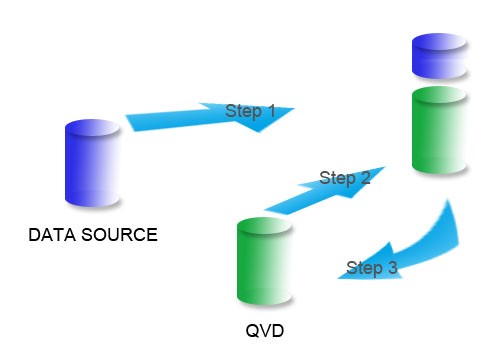

For an INSERT ONLY scenario, there is the assumption that new data will not create duplicate records. There is no set way to determine NEW data, so this must be reviewed case by case. Once a method for finding new records is determined, the reload process is a simple three step process.

- Load all NEW data from the data source

- Concatenate this data with a load of all data from the QVD file

- Store the entire table out to the QVD file

As long as the QVD is named the same, this will overwrite the previous QVD so the process can repeat for the next reload.

2. Insert & Update

The INSERT & UPDATE scenario also takes new data from the source but it also pulls in updated records. Additional precautions need to be taken in order to avoid duplicate records. During the load from the QVD, exclude records where there is a match on the primary key. This will ensure that the updated records will not be duplicated.

- Load all NEW and UPDATED data from the data source

- Concatenate this data with a load of only the missing records from the QVD file

- Store the entire table out to the QVD file

Example of Script

Data:

SQL SELECT

PrimaryKey,

A,

B,

C

FROM DB_Table

WHERE ModifyDate >= $(vDate);

CONCATENATE

LOAD

PrimaryKey,

A,

B,

C

FROM Data.qvd

WHERE NOT exists (PrimaryKey);

STORE Data into Data.qvd;

Using the Exists() function keeps the QVD from loading the obsolete records since the UPDATED version is currently in memory.

3. Insert, Update, & Delete

An INSERT, UPDATE, & DELETE script is very similar to the load process of the INSERT & UPDATE, however there is an additional step needed to remove deleted records. The most effective method is to load all the PrimaryKeys from the source and then apply an inner join. This will achieve the delete process.

- Load all NEW and UPDATED data from the data source

- Concatenate this data with a load of only the missing records from the QVD file

- Inner join all PrimaryKeys from the data source

- Store the entire table out to the QVD file

Example of Script

Data:

SQL SELECT

PrimaryKey,

A,

B,

C

FROM DB_Table

WHERE ModifyDate >= $(vDate);

CONCATENATE

LOAD

PrimaryKey,

A,

B,

C

FROM Data.qvd

WHERE NOT exists (PrimaryKey);

INNER JOIN

SQL SELECT

PrimaryKey,

FROM DB_Table;

STORE Data into Data.qvd;

Very large data sets can take a long time to load and greatly effect the performance of your QlikView documents over time. By implementing QVD optimization with incremental loads, this technique can be employed to perform faster loads in less time, utilizing less system resources.